Facial recognition makes sense as a method for your computer to recognize you. But just as people can be fooled (disguises! twins!), so can facial recognition tools. Now researchers have uncovered a particularly disturbing new method of stealing a face: one that’s based on 3-D rendering and some light Internet stalking.

Earlier this month at the Usenix security conference, security and computer vision specialists from the University of North Carolina presented a system that uses digital 3-D facial models (based on publicly available photos and displayed with mobile virtual reality technology) to defeat facial recognition systems. A VR-style face, rendered in three dimensions, gives the motion and depth cues that a security system is generally checking for. The researchers used a VR system shown on a smartphone’s screen for its accessibility and portability.

Their attack, which successfully spoofed four of the five systems they tried, is a reminder of the downside to authenticating your identity with biometrics. By and large your bodily features remain constant, so if your biometric data is compromised or publicly available, it’s at risk of being recorded and exploited. Faces plastered across the web on social media are especially vulnerable—look no further than the wealth of facial biometric data literally called Facebook.

Other groups have done similar research into defeating facial recognition systems, but unlike in previous studies, the UNC test models weren’t developed from photos the researchers took or ones that the study participants provided. The researchers instead went about collecting images of the 20 volunteers the way any Google stalker might—through image search engines, professional photos, and publicly available assets on social networks like Facebook, LinkedIn, and Google+. They found anywhere from three to 27 photos of each volunteer.

“You can’t always control your online presence or your online image,” says True Price, a study author who works on computer vision at UNC. Price points out that many of the study participants are computer science researchers themselves, and some make an active effort to protect their privacy online. Still, the group was able to find at least three photos of each of them.

The researchers tested their virtual reality face renders on five authentication systems: KeyLemon, Mobius, TrueKey, BioID, and 1U. All are available from consumer software vendors like the Google Play Store and the iTunes Store and can be used for things like protecting data and locking smartphones. To test the security systems, the researchers had the subjects program each one to detect their real faces. Then they showed 3-D renders of each subject to the systems to see if they would accept them. In addition to making face models from online photos, the researchers also took indoor head shots of each participant as a control, rendered them for virtual reality, and tested these against the five systems. Using the control photos, the researchers were able to trick all five systems in every case they tested. Using the public web photos, the researchers were able to trick four of the systems with success rates from 55 percent up to 85 percent.

Face authentication systems have been proliferating in consumer products like laptops and smartphones—Google even announced this year that it’s planning to put a dedicated image processing chip into its smartphones to do image recognition. This could help improve Android’s facial authentication, which was easily spoofed when it launched in 2011 under the name “Face Unlock” and was later improved and renamed “Trusted Face.” Nonetheless, Google warns, “This is less secure than a PIN, pattern, or password. Someone who looks similar to you could unlock your phone.”

Facial authentication spoofing attacks can use 2-D photos, videos, or in this case, 3-D face replicas (virtual reality renders, 3-D printed masks) to trick a system. For the UNC researchers, the most challenging part of executing their 3-D replica attack was working with the limited image resources they could find for each person online. Available photos were often low resolution and didn’t always depict people’s full faces. To create digital replicas, the group used the photos to identify “landmarks” of each person’s face, fit these to a 3-D render, and then used the best quality photo (factoring in things like resolution, lighting, and pose) to combine data about the texture of the face with the 3-D shape. The system also needed to extrapolate realistic texture for parts of the face that weren’t visible in the original photo. “Obtaining an accurately shaped face we found was not terribly difficult, but then retexturing the faces to look like the victims’ was a little trickier and we were trying to solve problems with different illuminations,” Price says.
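
To make the “best quality photo” idea concrete, here is a minimal sketch of how candidate photos might be ranked on resolution, lighting, and pose. It is an illustration only, not the UNC team’s method: the `Photo` fields, the scoring formula, the equal weighting, and the sample values are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Photo:
    """Hypothetical summary of one candidate photo (all fields are assumptions)."""
    name: str
    width: int
    height: int
    mean_brightness: float  # 0.0 (black) to 1.0 (white)
    yaw_degrees: float      # 0 means the face points straight at the camera


def quality_score(photo: Photo) -> float:
    """Combine resolution, lighting, and pose into one score between 0 and 1."""
    # Resolution: pixel count, capped at one megapixel so huge images don't dominate.
    resolution = min(photo.width * photo.height / 1_000_000, 1.0)
    # Lighting: best at mid-brightness (0.5), dropping to 0 at pure black or white.
    lighting = 1.0 - abs(photo.mean_brightness - 0.5) * 2.0
    # Pose: frontal is best, dropping to 0 for a full 90-degree profile.
    pose = max(0.0, 1.0 - abs(photo.yaw_degrees) / 90.0)
    # Equal weights are an arbitrary choice for illustration.
    return (resolution + lighting + pose) / 3.0


def pick_texture_source(photos: list[Photo]) -> Photo:
    """Return the photo with the highest quality score."""
    return max(photos, key=quality_score)


# Made-up sample data, not taken from the study.
photos = [
    Photo("profile.jpg", 2000, 2000, 0.50, 80.0),
    Photo("frontal.jpg", 800, 800, 0.50, 5.0),
    Photo("dark.jpg", 2000, 2000, 0.05, 0.0),
]
best = pick_texture_source(photos)
```

With these made-up numbers, the smaller but well-lit, nearly frontal photo outranks the two larger ones, which mirrors the trade-off described above: resolution alone does not decide which image supplies the texture.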

If a face model didn’t succeed at fooling a system, the researchers would try using texture data from a different photo. The last step for each face render was correcting the eyes so they appeared to look directly into the camera for authentication. At this point, the faces were ready to be animated as needed for “liveness clues” like blinking, smiling, and raising eyebrows—basically authentication system checks intended to confirm that a face is alive.
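
The retry step described above amounts to a simple loop: try one photo’s texture, and if the render is rejected, move on to the next. The sketch below shows only that control flow. The function names, the file names, and the stand-in `fake_authenticator` are hypothetical and do not come from the study or from any real authentication product.

```python
from typing import Callable, Iterable, Optional


def first_accepted_texture(
    photo_names: Iterable[str],
    is_accepted: Callable[[str], bool],
) -> Optional[str]:
    """Return the first photo whose textured render is accepted, or None."""
    for name in photo_names:
        if is_accepted(name):
            return name
    return None


def fake_authenticator(name: str) -> bool:
    """Stand-in for a real check; it accepts one hard-coded file name."""
    return name == "conference_headshot.jpg"


# Hypothetical candidate photos, in the order they would be tried.
candidates = ["profile_pic.jpg", "conference_headshot.jpg", "group_photo.jpg"]
result = first_accepted_texture(candidates, fake_authenticator)
```

Returning `None` when every texture fails keeps the failure case explicit, which corresponds to the one system in the study that the web-photo renders could not fool.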

In the “cat-and-mouse game” of face authenticators and attacks against them, there are definitely ways systems can improve to defend against these attacks. One example is scanning faces for human infrared signals, which wouldn’t be reproduced in a VR system. “It is now well known that face biometrics are easy to spoof compared to other major biometric modalities, namely fingerprints and irises,” says Anil Jain, a biometrics researcher at Michigan State University. He adds, though, that “while 3-D face models may visually look similar to the person’s face that is being spoofed, they may not be of sufficiently high quality to get authenticated by a state of the art face matcher.”

The UNC researchers agree that it would be possible to defend against their attack. The question is how quickly consumer face authentication systems will evolve to keep up with new methods of spoofing. Ultimately, these systems will probably need to incorporate hardware and sensors beyond just mobile cameras or web cams, and that might be challenging to implement on mobile devices where hardware space is very limited. “Some vendors—most notably Microsoft with its Windows Hello software—already have commercial solutions that leverage alternative hardware,” UNC’s Price says. “However, there is always a cost-benefit to adding hardware, and hardware vendors will need to decide whether there is enough demand from and benefit for consumers to add specialized components like IR cameras or structured light projectors.”

Biometric authenticators have the potential to be extremely powerful security mechanisms, but they’re threatened when would-be attackers gain easy access to personal data. In the Office of Personnel Management breach last year, for instance, hackers stole data for 5.6 million people’s fingerprints. Those markers will be in the wild for the rest of the victims’ lives. That data breach debacle and the UNC researchers’ study capture the troubling nature of biometric authentication: When your fingerprint, or faceprint, leaks into the ether, there’s no password reset button that can change it.

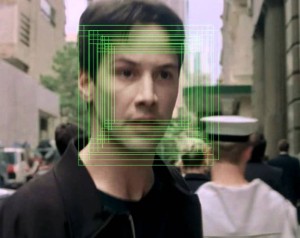

By Lily Hay Newman for wired.com | Photo: The Matrix
